Never Changing DeepSeek ChatGPT Will Eventually Destroy You

Coupled with advanced cross-node communication kernels that optimize data transfer over high-speed technologies like InfiniBand and NVLink, this framework allows the model to maintain a consistent computation-to-communication ratio even as the model scales. Much like the scrutiny that led to TikTok bans, worries about data storage in China and potential government access raise red flags. While China is the biggest mobile app market for DeepSeek Chat today, it represents only 23% of its total downloads, according to Sensor Tower. While OpenAI and other established players still hold significant market share, the emergence of challengers like DeepSeek signals an exciting period for artificial intelligence - one where efficiency and accessibility matter just as much as power. Thanks to geopolitical factors like U.S. In fact, these were the strictest controls in the entire October 7 package because they legally prevented U.S. With U.S. export restrictions limiting access to advanced chips, many predicted that Chinese AI development would face significant setbacks. DeepSeek is part of a growing trend of Chinese companies contributing to the global open-source AI movement, countering perceptions that China's tech sector is primarily focused on imitation rather than innovation.

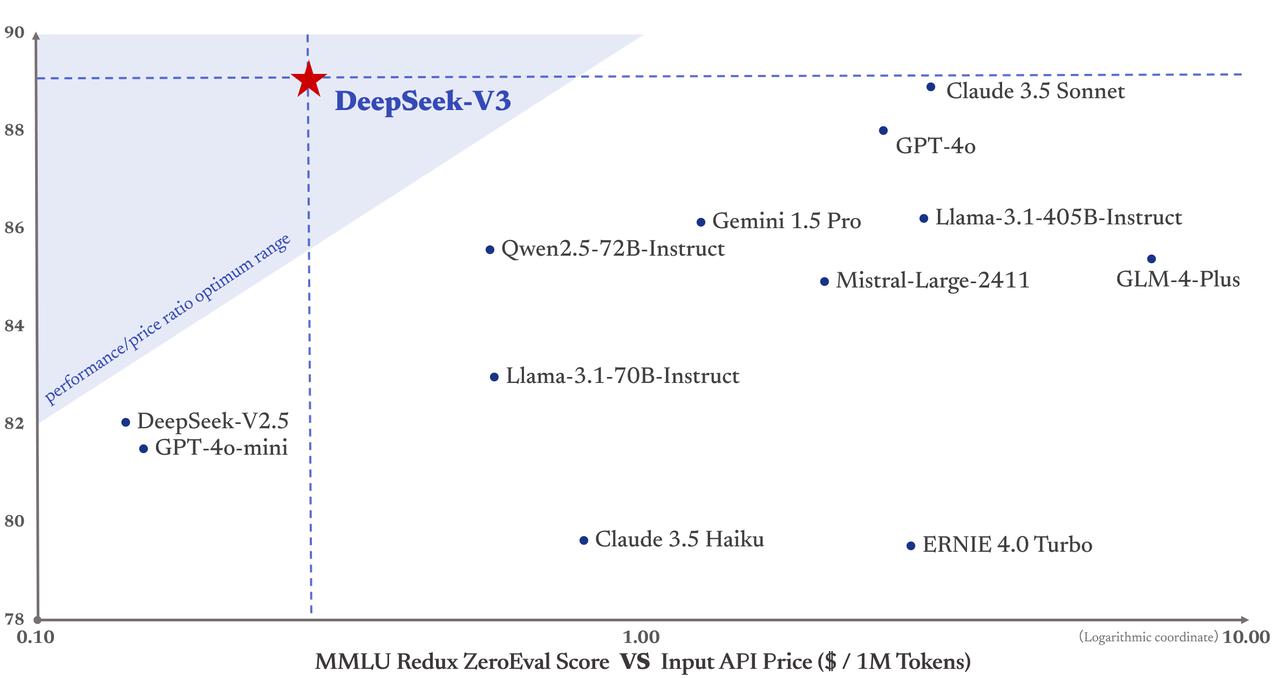

One such contender is DeepSeek, a Chinese AI startup that has quickly positioned itself as a serious competitor in the global AI race. If you are on the internet, you have almost certainly crossed paths with one AI service or another. Chances are that you are reading this article’s summary via an AI service. In essence, DeepSeek V3 and ChatGPT tend to serve different target audiences and different use cases. Use Codestral in your favorite coding and building environment. We had also found that using LLMs to extract functions wasn’t particularly reliable, so we changed our approach for extracting functions to use tree-sitter, a code parsing tool that can programmatically extract functions from a file. This open-source approach could have ripple effects throughout the AI industry. Instead, companies like DeepSeek have showcased how innovation and strategic design can overcome these obstacles. Features like Function Calling, FIM completion, and JSON output remain unchanged. 0.28 per million output. DeepSeek has attracted attention in global AI circles after writing in a paper in December 2024 that the training of DeepSeek-V3 required less than $6 million worth of computing power from Nvidia H800 chips. All included, costs for building a cutting-edge AI model can soar up to US$100 million.
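To make the idea of programmatic function extraction concrete, here is a minimal sketch. It does not use tree-sitter itself: as a stand-in, it relies on Python's standard-library `ast` module, which only parses Python source, whereas tree-sitter supports many languages. The helper name `extract_functions` and the `SAMPLE` input are hypothetical and exist only for illustration.

```python
import ast


def extract_functions(source):
    """Return a dict mapping each function name in `source` to its source text.

    Illustrative stand-in for tree-sitter: the parser walks the syntax tree
    and pulls out function definitions, so no LLM is needed for this step.
    """
    tree = ast.parse(source)
    functions = {}
    for node in ast.walk(tree):
        # Cover both plain and async function definitions, including methods.
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            functions[node.name] = ast.get_source_segment(source, node)
    return functions


# Hypothetical input file contents, used only to demonstrate the helper.
SAMPLE = '''
def add(a, b):
    return a + b


class Greeter:
    def greet(self, name):
        return "hi " + name
'''

functions = extract_functions(SAMPLE)
for name in sorted(functions):
    print(name)
    print(functions[name])
    print()
```

Because the extraction is driven by the parser rather than by a model, it gives the same result on every run for the same file, which is presumably the reliability gain the passage describes.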

Imagine a world where developers can tweak DeepSeek-V3 for niche industries, from personalized healthcare AI to educational tools designed for specific demographics. This drastic price difference could make AI tools more accessible to smaller companies, startups, and even hobbyists, who might previously have been priced out of leveraging advanced AI capabilities. Woyera helps businesses build AI-powered chatbots and automation tools to streamline workflows and improve efficiency. It challenges long-standing assumptions about what it takes to build a competitive AI model. Instead, the announcement came within a week of OpenAI’s demonstration of o3, a new model that would rank in the 99.9th percentile of all competitive coders and could correctly solve the world’s hardest math problems at 10 times the speed of its predecessor. In benchmark tests, it performs on par with heavyweights like OpenAI’s GPT-4o, which is no small feat. This design isn’t just about saving computational power - it also enhances the model’s ability to handle complex tasks like advanced coding, mathematical reasoning, and nuanced problem-solving. This enhancement allows it to handle more complex coding challenges effectively.

It has been disappointing to watch foundation model research become more and more closed over the past few years. By creating a model that sidesteps hardware dependencies, the company is showing how innovation can flourish even in difficult circumstances. DeepSeek-V3 is a prime example of how fresh ideas and clever strategies can shake up even the most competitive industries. Apple actually closed up yesterday, because DeepSeek is great news for the company - it’s proof that the "Apple Intelligence" bet, that we can run good-enough local AI models on our phones, could actually work one day. Then it finished with a discussion about how some research might not be ethical, or could be used to create malware (of course) or do synthetic bio research for pathogens (whoops), or how AI papers might overload reviewers, though one might suggest that the reviewers are no better than the AI reviewer anyway, so… We were wary of building this ourselves, but one day we stumbled upon Asad Memon’s codemirror-copilot, and hooked it up. The property management at its building ushered all uninvited guests to a room to turn down their requests for a visit. While many companies keep their AI models locked up behind proprietary licenses, DeepSeek has taken a bold step by releasing DeepSeek-V3 under the MIT license.
